The Basics of BigQuery Costs

Managing costs in BigQuery begins with understanding the fundamentals. BigQuery charges users primarily for storage and processing, each with unique considerations that affect the total spend. Let’s dive into the details of each.

Storage Costs

BigQuery storage charges are based on the amount of data stored, measured in terabytes per month. Storage costs are split into two categories:

- Active storage: Data that has been updated within the past 90 days is billed at the standard rate.

- Long-term storage: Data that hasn’t been modified in over 90 days receives a discounted storage rate.

To further optimize storage costs, you can choose between billing by physical size (the total bytes stored) or logical size (the compressed, deduplicated data size). This choice allows you to tailor the storage cost based on how data is queried and structured, which we’ll cover in detail in an upcoming optimization section.

Processing Costs

BigQuery processing charges are based on two possible pricing models:

- Slot Reservations (available in BigQuery Editions): By reserving a set number of compute slots, you secure processing capacity at a fixed rate, with the option to auto-scale as demand grows.

- On-Demand Processing: Charges per terabyte processed in each query, ideal for irregular or smaller workloads that don’t require continuous compute resources.

Understanding these core cost drivers is the first step toward controlling and optimizing BigQuery expenses.

Enhancing Visibility: Using Labels and Metadata in dbt

A key part of managing BigQuery costs is maintaining clear visibility into expenses across different projects, models, and operations. Consistent labeling of operations and resources helps track costs down to specific workflows, teams, or individual models. However, manually labeling each job can be tedious and prone to error, especially in complex data environments.

This is where dbt’s metadata features can simplify the process with automated labeling.

Labeling Operations in dbt

In dbt, you can configure automated labels that are passed to BigQuery, enabling detailed tracking of workload costs. Here’s how you can set it up in the dbt_project.yml configuration file:

Copied!query-comment: comment: "{{ bq_labels(node, var) }}" job-label: True

Next, use a macro for automatic labeling to apply relevant metadata for each operation:

Copied!{% macro bq_labels(node, var) %} {%- set comment_dict = {} -%} {%- do comment_dict.update( app='background_tasks', customer=var('customer', 'test'), repository="analytics", processed_by=env_var('username','cloud_run_task'), profile_name=target.get('profile_name'), target_name=target.get('target_name') ) -%} {%- if node is not none -%} {%- do comment_dict.update( file=node.original_file_path, node_id=node.unique_id, node_name=node.name, resource_type=node.resource_type, package_name=node.package_name, database=node.database, schema=node.schema ) -%} {%- endif -%} {% do return(tojson(comment_dict)) %} {% endmacro %}

With this setup, dbt will label each query, making it easier to:

- Track Costs by Model: Monitor which models are driving up costs with targeted queries. For example, you can analyze the data using the following SQL query in BigQuery:

Copied!SELECT ARRAY((SELECT value FROM UNNEST(labels) WHERE key = "label_name"))[SAFE_OFFSET(0)] AS label, SUM(total_slot_ms), SUM(total_bytes_billed) FROM ``.`region-eu`.INFORMATION_SCHEMA.JOBS_BY_PROJECT WHERE EXTRACT(DATE FROM creation_time) = CURRENT_DATE() AND ARRAY((SELECT value FROM UNNEST(labels) WHERE key = "label_name"))[SAFE_OFFSET(0)] IS NOT NULL GROUP BY 1

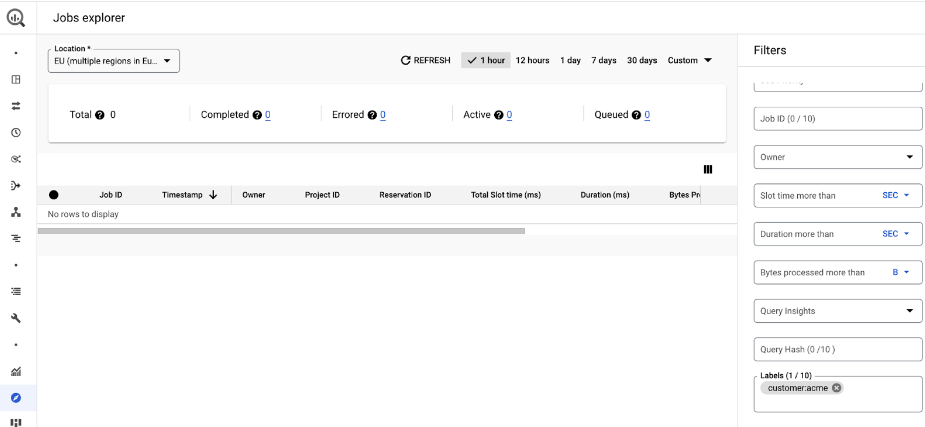

- Simplify Debugging: Use labels to filter specific jobs in the BigQuery UI job explorer for faster troubleshooting.

- Optimize Resource Usage: Identify which models are causing auto-scaling in slot reservations, allowing you to adjust for greater efficiency.

Labeling Storage Resources

In addition to labeling operations, you can also configure storage-level labels by applying labels at either the dbt_project.yml level or the individual model level. Note that dbt_project.yml labels will override any model-level labels if both are set.

For instance, to set labels directly on a model:

Copied!{{ config( tags=["ingestion"], materialized='table', labels={'maintained_by': 'team_A'} ) }}

To query labels on tables at the storage level, you can use a query like this in BigQuery:

Copied!SELECT *, ARRAY((SELECT AS STRUCT json[0] AS key, json[1] AS value FROM UNNEST(ARRAY( (SELECT AS STRUCT JSON_VALUE_ARRAY(CONCAT('[', elements, ']')) AS json FROM UNNEST(regexp_extract_all(option_value, r'STRUCT\("[^"]+", "[^"]+"\)')) elements) )))) AS labels FROM .INFORMATION_SCHEMA.TABLE_OPTIONS WHERE option_name = 'labels'

This will return the labels applied to tables, which can be extremely useful for breaking down storage costs by dataset or table owner.

Takeaway

By understanding the basics of BigQuery costs and applying dbt’s metadata capabilities, you can track and control your expenses more effectively. Implementing labels and monitoring these details will set the foundation for a more efficient, cost-aware data workflow.

Stay tuned for the next episode, where we’ll explore advanced BigQuery cost optimization techniques to further manage and reduce expenses.